Authors

Salvatore Aurigemma & Raymond R. Panko

Abstract

Spreadsheets are widely used in the business, public, and private sectors. However, research and practice have generally shown that spreadsheets frequently contain errors.

Several researchers and vendors have proposed the use of spreadsheet static analysis programs (SAPs) as a means to augment or potentially replace the manual inspection of spreadsheets for errors. SAPs automatically search spreadsheets for indications of certain types of errors and present these indications to the inspector. Despite the potential importance of SAPs, their effectiveness has not been examined.

This study compares the effectiveness of two widely fielded SAPs with that of manual human inspection in detecting a set of naturally generated quantitative errors in a simple yet realistic spreadsheet model.

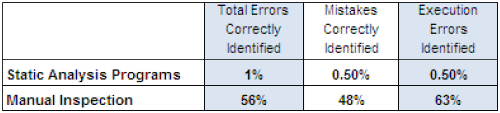

The results showed that, while the performance of manual human inspection in this study was consistent with previous research in the field, the static analysis programs performed very poorly at detecting natural errors in every category of spreadsheet error.

Sample

Errors were sought in a corpus of 43 spreadsheets, which together contained 97 natural errors.

Overall, the 43 human inspectors found 56% of the total natural errors; only 1% of the total errors were properly identified by the two static analysis programs.

Automated error tagging therefore appears to be of limited value for screening spreadsheets during the development process, whether it is used to augment or to replace human inspection for errors.

Given that spreadsheet developers have been shown to be overconfident in the accuracy of their designs, relying on SAPs that detect errors so poorly could exacerbate a developer's false sense of spreadsheet accuracy, simply because these programs flag so few actual errors.

Publication

2014, Journal of Organizational and End User Computing, Volume 26, Number 1, January, pages 47-65


Also see

The detection of human spreadsheet errors by humans versus inspection (auditing) software